< Probability of Wrong People Getting Persecuted >

“What would it take for a global totalitarian government to rise to power indefinitely? This nightmare scenario may be closer than first appears.”

https://www.bbc.com/future/article/20201014-totalitarian-world-in-chains-artificial-intelligence

It would be nightmarish to see conscientious citizens getting mechanically identified, detained, tortured and killed.

Even more nightmarish would be to see the wrong citizens facing the same fate.

How probable would it be?

We have no clue, because vendors of face recognition systems do not publicise empirical data on False Acceptance Rates and the corresponding False Rejection Rates. (In the context of this article, 'empirical' means, for instance, measurements taken outdoors in the street.)

For more about False Acceptance and False Rejection, please see the linked articles:

- ‘Harmful for security or privacy’ OR ‘Harmful for both security and privacy’

- What we are apt to do

‘Security vs Privacy’ OR ‘Security & Privacy’

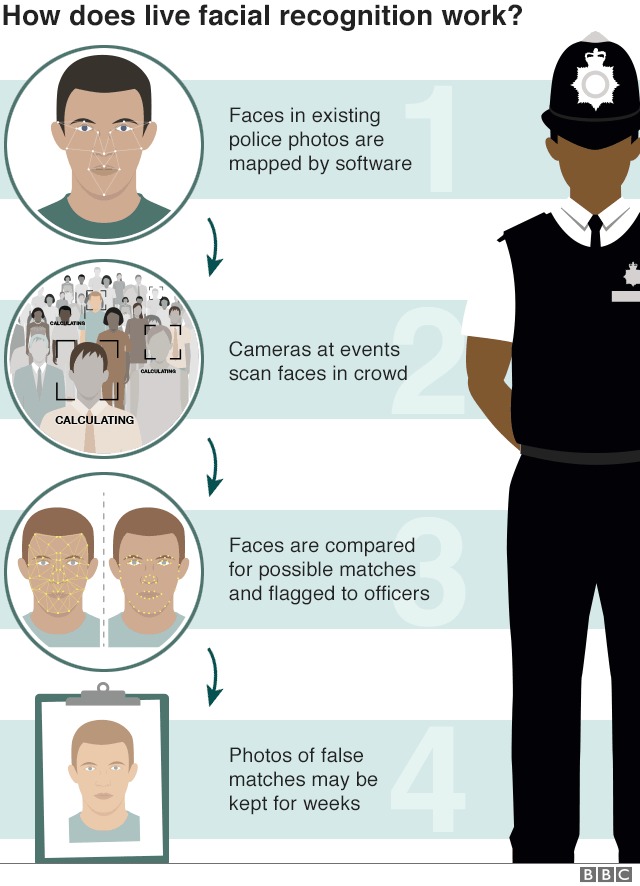

Police facial recognition surveillance court case starts ( https://www.bbc.co.uk/news/uk-48315979 )

I am interested in what is not mentioned in the linked BBC report: the empirical rate of target suspects not getting spotted (False Non-Match) in a deployment where 92% of 2,470 potential matches were wrong (False Match).

The police could have gathered such False Non-Match data in the street easily and quickly by having several officers act as suspects, some of them disguised with cosmetics, glasses, wigs, beards, bandages, etc., as many suspects are presumed to do when walking in the street.

Combining the False Match and False Non-Match data, they would be able to obtain the overall picture of the performance of the AFR (automated face recognition) in question.
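As a rough sketch of what "combining" the two kinds of data could look like, the snippet below puts the reported alert figures next to the result of a decoy exercise. The class name `AfrStreetTrial`, its fields, and above all the decoy numbers are assumptions made up for illustration; only the 92% and 2,470 figures come from the report discussed here. Note also that the 92% is treated as the share of alerts that were wrong, since the number of faces scanned is not given in this article.

```python
from dataclasses import dataclass


@dataclass
class AfrStreetTrial:
    """Counts from one street deployment plus one decoy exercise (hypothetical structure)."""
    alerts: int          # potential matches raised by the AFR
    false_alerts: int    # alerts that turned out to be the wrong person
    decoy_passes: int    # times a known decoy officer walked past the camera
    decoy_misses: int    # passes where the decoy was NOT flagged

    def false_alert_share(self) -> float:
        """Share of alerts that pointed at the wrong person."""
        return self.false_alerts / self.alerts

    def false_non_match_rate(self) -> float:
        """Share of decoy passes that the system failed to spot."""
        return self.decoy_misses / self.decoy_passes


# Figures from the article: 92% of 2,470 potential matches were wrong.
ALERTS = 2470
FALSE_ALERTS = round(0.92 * ALERTS)

# ASSUMPTION: placeholder decoy numbers, invented purely for illustration.
trial = AfrStreetTrial(ALERTS, FALSE_ALERTS, decoy_passes=200, decoy_misses=60)

print(f"wrong alerts:       {trial.false_alert_share():.1%}")
print(f"decoys not spotted: {trial.false_non_match_rate():.1%}")
```

With real decoy counts in place of the placeholders, the two percentages side by side would give the overall picture: how often the system flags the wrong person, and how often it lets the right one walk by.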

1. If the AFR is judged accurate enough to correctly identify a person at a meaningful probability, it could be viewed as a serious ‘threat to privacy’ in democratic societies, as civil-rights activists fear. This scenario is very unlikely, though, in view of the 92% figure for false spotting.

2. If the AFR is judged inaccurate enough to misidentify a person at a meaningful probability, as we would suspect it is, we could conclude not only that deploying AFR is just a waste of time and money but also that a false sense of security brought by misguided, excessive reliance on AFR could be a ‘threat to security’.

Incidentally, should (2) be the case, we could draw two different observations.

(A) It could discourage civil-rights activists: the AFR is hardly worth being called a 'threat' to our privacy, since it proves only that he may or may not be he and she may or may not be she; that is, an individual may or may not be identified correctly.

(B) It could encourage civil-rights activists: it debunks the story that AFR increases security so much that a certain level of threat to privacy must be tolerated.

It would be up to civil-rights activists which viewpoint to take.

Anyway, different people could reach different conclusions from this observation. I would like to see some police force conduct the empirical False Non-Match research in the street as indicated above, which could solidly establish whether “AFR is a threat to privacy though it may help security” or “AFR is a threat to both privacy and security”.

Articles by Hitoshi Kokumai


I take up this report today - “Facebook's metaverse plans labelled as 'dystopian' and 'a bad idea'” ...

https://aitechtrend.com/quantum-computing-and-password-authentication/ · My latest article titled ‘Q ...

Today's topic is BBC's “Facebook to end use of facial recognition software” · https://www.bbc.com/n ...
