‘Harmful for security or privacy’ OR ‘Harmful for both security and privacy’

As the situation remains the same, I am bringing back an article I posted 13 months ago.

From one angle, biometrics would be harmful to ‘privacy’ if it were as accurate as claimed, or harmful to ‘security’ if it were not that accurate.

From another angle, biometrics is harmful to ‘both security and privacy’, whether it is accurate or not.

https://www.linkedin.com/pulse/security-vs-privacy-hitoshi-kokumai

........................................................

‘Security vs Privacy’ OR ‘Security & Privacy’

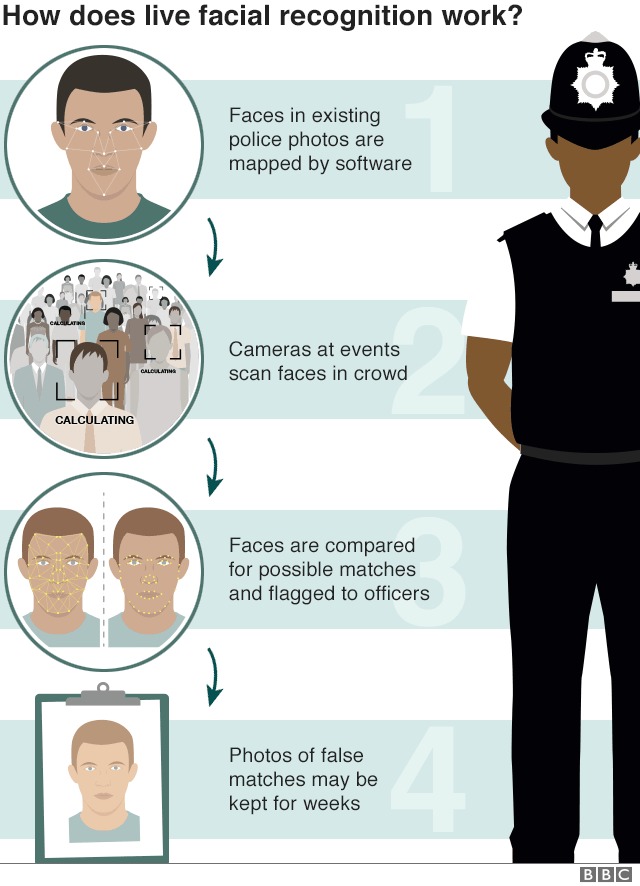

Police facial recognition surveillance court case starts ( https://www.bbc.co.uk/news/uk-48315979 )

I am interested in what the linked BBC report does not mention: the empirical rate at which target suspects went unspotted (False Non-Match), given that 92% of the 2,470 potential matches were wrong (False Match).

The police could have gathered such False Non-Match data in the street easily and quickly by having several officers act as suspects, some of them disguised with cosmetics, glasses, wigs, beards, bandages, etc., as many real suspects would presumably be when walking in the street.

By combining the False Match and False Non-Match data, they would obtain an overall picture of the performance of the AFR (automated face recognition) system in question.
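To make that combination concrete, here is a minimal Python sketch, assuming a street trial of the kind described above. The class `FieldTrial` and its field names are hypothetical. Only the 2,470 alerts and the 92% share of wrong alerts come from the BBC report; the false-alert count is reconstructed from that rounded percentage, so it is approximate. The planted-officer figures in the usage example are placeholders, not measured data. Note that the 92% figure is the share of alerts that were wrong, which is not the same as a per-comparison false match rate; that would also require the number of people scanned.

```python
from dataclasses import dataclass


@dataclass
class FieldTrial:
    """Counts from one street deployment of an AFR system (hypothetical structure)."""
    alerts: int          # potential matches the system raised
    false_alerts: int    # alerts that flagged the wrong person (False Match side)
    planted_passes: int  # walk-pasts by officers acting as listed suspects
    planted_missed: int  # walk-pasts the system failed to flag (False Non-Match side)

    def __post_init__(self) -> None:
        # Reject counts that cannot be consistent with each other.
        if not 0 <= self.false_alerts <= self.alerts:
            raise ValueError("false_alerts must lie between 0 and alerts")
        if not 0 <= self.planted_missed <= self.planted_passes:
            raise ValueError("planted_missed must lie between 0 and planted_passes")
        if self.alerts == 0 or self.planted_passes == 0:
            raise ValueError("need at least one alert and one planted pass")

    def false_alert_share(self) -> float:
        """Fraction of raised alerts that were wrong (the kind of figure the BBC report gives)."""
        return self.false_alerts / self.alerts

    def false_non_match_rate(self) -> float:
        """Fraction of planted 'suspects' the system failed to spot."""
        return self.planted_missed / self.planted_passes

    def detection_rate(self) -> float:
        """Fraction of planted 'suspects' the system did spot."""
        return 1.0 - self.false_non_match_rate()


if __name__ == "__main__":
    # 2,470 alerts with 92% wrong, as quoted from the BBC report;
    # round(0.92 * 2470) only approximates the true count of false alerts.
    # planted_passes / planted_missed are HYPOTHETICAL placeholders.
    trial = FieldTrial(alerts=2470, false_alerts=round(0.92 * 2470),
                       planted_passes=100, planted_missed=40)
    print(f"share of alerts that were wrong: {trial.false_alert_share():.1%}")
    print(f"false non-match rate: {trial.false_non_match_rate():.1%}")
    print(f"detection rate: {trial.detection_rate():.1%}")
```

Reading the two rates side by side is the point: a high share of wrong alerts combined with a high miss rate for disguised officers would point toward scenario (2) below, while a low miss rate would at least leave scenario (1) open.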

1. If the AFR is judged accurate enough to identify a person correctly with meaningful probability, it could be viewed as a serious ‘threat to privacy’ in democratic societies, as civil-rights activists fear. This scenario is very unlikely, though, given the 92% figure for false spotting.

2. If the AFR is judged inaccurate enough to misidentify a person with meaningful probability, as we suspect it is, we could conclude not only that deploying AFR is a waste of time and money, but also that the false sense of security brought about by misguided, excessive reliance on AFR could be a ‘threat to security’.

Incidentally, should (2) be the case, we could draw two different observations.

(A) It could discourage civil-rights activists: AFR is hardly worth calling a ‘threat’ to our privacy, since it proves only that he may or may not be he and she may or may not be she; in other words, an individual may or may not be identified correctly.

(B) It could encourage civil-rights activists: it debunks the story that AFR increases security so much that a certain level of threat to privacy must be tolerated.

It would be up to civil-rights activists which viewpoint to take.

Anyway, different people could reach different conclusions from this observation. I would like to see some police force conduct the empirical False Non-Match research in the street as outlined above, which could firmly establish whether “AFR is a threat to privacy though it may help security” or “AFR is a threat to both privacy and security”.

Comments

Hitoshi Kokumai

3 years ago #2

No objection. Biometrics, when deployed unwisely, would make racism worse. I am focusing on the threat of biometrics to the security of digital identity because few other people talk much about this particular issue, whereas many people are already talking about the threat of biometrics to privacy and civil rights.

Zacharias 🐝 Voulgaris

3 years ago #1